“Work smart, not hard” has become one of the most repeated pieces of professional advice. It usually carries an implicit promise: there exists a clever shortcut that will save you time, energy, or discomfort.

Sometimes, that promise is true. Automation, better tools, experience, and process improvements genuinely reduce effort. But the mistake lies in assuming that smart work always means less work.

In many situations—especially early in one’s career or when entering a new domain—there is no less-effort path. There is only the work.

The Problem With the Shortcut Definition

The popular interpretation of smart work is task-centric:

- Can I finish this faster?

- Can I avoid this step?

- Can I optimize this process?

These are valid questions, but they are also narrow. They assume that the purpose of work is merely to complete a task.

But work, especially meaningful work, is rarely just about completion. It is also about:

- Skill acquisition

- Pattern recognition

- Judgment formation

- Decision-making under uncertainty

None of these emerge from shortcuts alone.

A More Honest Definition of Smart Work

A more realistic definition of smart work is this: “Smart work is effort that compounds.”

It is not about how little energy you expend today, but about whether today’s effort takes you to a higher baseline tomorrow.

Sometimes, smart work looks like:

- Doing the same task repeatedly until you understand it deeply

- Investing extra time to learn why something works, not just how

- Choosing a slower path because it builds transferable skills

In this sense, smart work is not the opposite of hard work. It is “hard work with direction.”

Smart work becomes clearer when we move away from professions where “optimization” is obvious and look at roles where effort cannot easily be reduced.

Take a security guard or a gatekeeper. There is no faster way to “guard” a gate, no clever hack to replace vigilance. Yet smart work exists even here: not in doing less, but in seeing more. Over time, a good guard begins to recognize patterns: who belongs, what normal looks like, when something feels off. The work does not change, but the depth of perception does.

The same is true for a factory worker on an assembly line. While the motion may be repetitive, understanding the machine, anticipating faults, noticing inefficiencies, or maintaining consistency under pressure transforms the worker from someone who merely performs a task into someone who masters a process.

A junior software engineer may write code that works, but smart work lies in learning how systems scale, why decisions were made, and how failures propagate: skills that are invisible in the short term but decisive over a career.

Even in professions like teaching or nursing, where care and presence cannot be optimized away, smart work emerges through experience: reading people better, responding calmly under stress, knowing when to intervene and when to step back.

Across these roles, smart work is not about reducing effort; it is about accumulating judgment. It is the quiet, often unnoticed process of becoming better at the same work—until one is ready, naturally, for the next level.

Progress, Not Comfort, Is the Metric

Smart work should be evaluated not by immediate ease, but by progression:

- Are you better at this than you were last month?

- Are you faster because you’re skilled, not because you skipped steps?

- Are you moving toward more complex, meaningful problems?

When someone becomes exceptionally good at a task, speed follows naturally. At that point, finishing faster isn’t the goal; it’s a side effect. The real win is that you’re now ready for the next layer of responsibility.

That transition from execution to ownership, from instruction to intuition, is the truest marker of smart work.

When Smart Work Looks Like Hard Work

There are phases in life where smart work is indistinguishable from hard work:

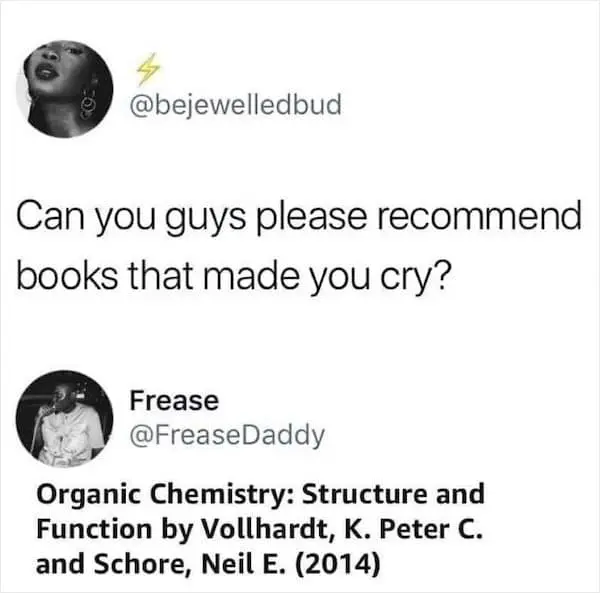

- Learning a new discipline

- Building foundational skills

- Starting something from scratch

In these phases, avoiding effort is not intelligence; it is avoidance.

Smart work here is about endurance with awareness:

- Paying attention to feedback

- Refining technique

- Building mental models

- Letting effort shape competence

Work as a Vehicle, Not a Burden

Perhaps the biggest shift in thinking is this:

Smart work is not about escaping work; it is about using work as a vehicle for:

- Growth

- Self-discovery

- Capability expansion

- Earning optionality

When seen this way, effort is not something to minimize blindly, but something to invest wisely.

In Closing

Smart work is not the art of doing less.

It is the discipline of ensuring that whatever you do today moves you forward.

Sometimes that means finding a smarter method.

Sometimes it means doing the work so well that the next step reveals itself.

Both are smart.

Avoiding effort, however, rarely is.